Have you ever felt like your AI projects were missing something… that extra bit of flair? I certainly did. Last month, I was chatting with a friend who works at a local robotics lab, and she said, “The future of AI isn’t just about words, but images, sounds, and experiences.” That got me thinking, and tinkering! So if you’re curious too, let me show you how to build a multimodal AI agent using n8n.

Introduction: Why We Need Multimodal AI

Lately, AI news has been all over the tech press, and everyone’s buzzing about the same idea: AI systems can’t rely on text alone. To become truly versatile, they need to handle multiple forms of data, such as images, audio, or even sensor readings. After all, humans don’t live by words alone.

In my view, multimodal AI feels like the next big leap. And since the source-available (“fair-code”) automation tool n8n makes it a breeze to chain different services together, we can do some wild stuff. Ready to dive in?

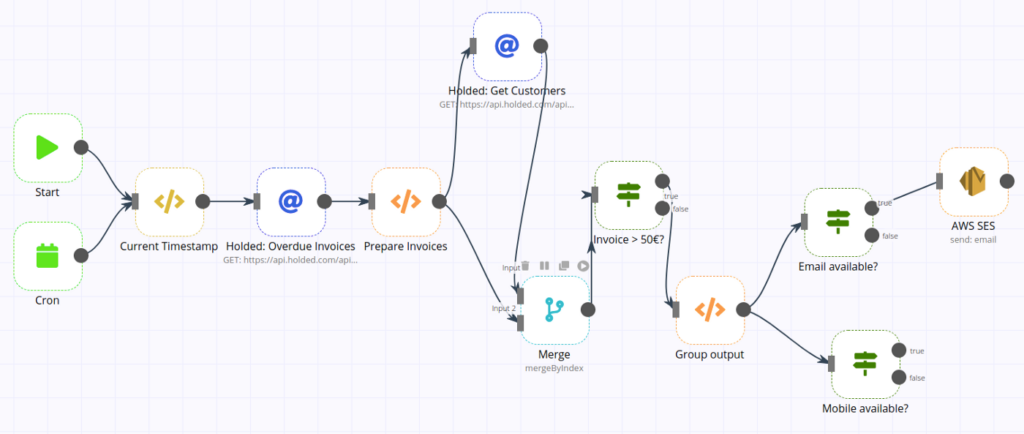

1. Understanding n8n as Your Workflow Wizard

Now, n8n might sound like a quirky brand of cereal, but it’s actually a mighty automation platform. You can think of it like a digital spiderweb, weaving different applications into a single workflow.

I first discovered n8n while searching for a replacement for expensive, off-the-shelf automation tools.

Source: https://blog.n8n.io/

2. Setting Up Your Workspace

Alright, let’s get practical. First, install n8n on your local machine or cloud server:

1. Install Docker (if you prefer containers; n8n can also be started without Docker via npm, using `npx n8n`).

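2. Run `docker run -it --rm -p 5678:5678 n8nio/n8n` to launch your n8n instance. Because `--rm` deletes the container when it stops, add `-v n8n_data:/home/node/.n8n` if you want your workflows to persist between runs.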

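3. Create your n8n account: a self-hosted instance asks you to set up an owner account the first time you open it, while n8n Cloud requires signing up online.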

Then confirm that everything is running smoothly: the n8n editor should load in your browser at http://localhost:5678.
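If you’d rather check from a script than from the browser, here is a minimal Python sketch using only the standard library. It simply requests the default local address mentioned above and reports whether anything answered; the function name `n8n_is_up` is my own, not part of n8n.

```python
import urllib.request


def n8n_is_up(base_url: str = "http://localhost:5678", timeout: float = 3.0) -> bool:
    """Return True if the n8n editor answers with a successful HTTP status."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except OSError:
        # URLError, HTTPError and timeouts are all OSError subclasses,
        # so this covers "connection refused" while the container starts.
        return False


if __name__ == "__main__":
    print("n8n is up" if n8n_is_up() else "n8n is not reachable yet")
```

Running it a few times while Docker boots is a handy way to know when the restart dance is over.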

And if you’re feeling a bit uncertain, don’t worry—I also had to restart my Docker container a couple of times before it worked flawlessly. It’s part of the process!

3. Integrating Your AI Models

Next up, we need some AI brains. You can connect different AI models to handle various data types:

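- Text: Integrate a large language model, such as GPT, through an API provider like OpenAI.

- Images: Use an image-analysis model, for example one served through Hugging Face’s Inference API.

- Audio: Connect to a speech recognition service. Mozilla’s DeepSpeech is one open-source option, though it is no longer actively maintained, so a newer model such as OpenAI’s Whisper may be a safer bet.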

For instance, if you’re building a chatbot that can also interpret uploaded photos, you’d create multiple n8n nodes. One node receives text data, another handles image data, and then each calls the respective AI service.

It might feel a bit complicated, but think of it like assembling a puzzle: each piece has a specific job, and n8n helps them fit together perfectly.
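To make the “one node per data type” idea concrete, here is a minimal Python sketch of the routing decision a Switch node would make at the start of such a workflow. It assumes a hypothetical incoming payload with optional `mime_type` and `text` fields; the branch names are illustrative labels, not n8n identifiers.

```python
from typing import Any


def route_by_modality(payload: dict[str, Any]) -> str:
    """Pick the processing branch for an incoming message.

    Field names ("mime_type", "text") are hypothetical; adapt them to
    whatever your trigger node actually receives.
    """
    mime_type = payload.get("mime_type", "")
    if mime_type.startswith("image/"):
        return "image_branch"  # e.g. call an image-classification model
    if mime_type.startswith("audio/"):
        return "audio_branch"  # e.g. call a speech-recognition service
    if payload.get("text"):
        return "text_branch"  # e.g. call a large language model
    return "unsupported"


if __name__ == "__main__":
    samples = [
        {"text": "What breed is this?"},
        {"mime_type": "image/jpeg", "file": "cat.jpg"},
        {"mime_type": "audio/wav", "file": "question.wav"},
        {"mime_type": "application/pdf"},
    ]
    for sample in samples:
        print(route_by_modality(sample))
```

Checking the file type before the text means a photo with a caption still goes to the image branch; swap the order if captions matter more in your use case.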

4. Designing the Workflow

Once your AI connections are ready, you’ll lay out your workflow:

1. Trigger Node – Start your workflow when a user sends a message or uploads an image (for example, with a Webhook or Chat Trigger node).

2. AI Processing Nodes – One for text analysis, one for image classification, etc.

3. Decision Node – Route outputs based on certain conditions using an IF or Switch node (e.g., if the user sends a cat photo, do X; if a dog, do Y).

4. Output Node – Send the results back to the user or store them in a database.
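The decision step above boils down to “look at the classifier’s best guess and pick a branch.” Here is a minimal Python sketch of that logic. It assumes the image model returns a list of label/score pairs (the shape Hugging Face image-classification models commonly produce) with coarse labels like “cat” and “dog”; the `ACTIONS` mapping and the `do_x`/`do_y` branch names are hypothetical placeholders.

```python
from typing import TypedDict


class Prediction(TypedDict):
    label: str
    score: float


# Hypothetical mapping from classifier label to workflow branch.
ACTIONS = {"cat": "do_x", "dog": "do_y"}


def decide(predictions: list[Prediction]) -> str:
    """Route on the top-scoring label, as an IF or Switch node would."""
    if not predictions:
        return "ask_user_to_retry"
    top = max(predictions, key=lambda p: p["score"])
    # Unknown labels fall through to a generic branch instead of failing.
    return ACTIONS.get(top["label"].lower(), "fallback")


print(decide([{"label": "dog", "score": 0.91}, {"label": "cat", "score": 0.07}]))  # do_y
```

Keeping an explicit fallback branch is what stops a surprise label (say, a horse) from silently breaking the flow.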

My friend Sam had a real laugh testing a similar flow for a pet rescue project. He said it felt like teaching an AI to “speak dog”—which, in a sense, it kind of does!

5. Testing and Troubleshooting

We all know that AI can throw odd results sometimes… or maybe that’s just me. Either way, it’s wise to run small tests:

- Check the Executions list in n8n to see whether your nodes are firing correctly and what data each one produced.

- Test a variety of inputs: different data types (text, images, audio) and different subjects, like an image of a cat and another of a horse.

- Adjust thresholds in your AI services if results are too noisy.
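Adjusting a threshold usually means discarding predictions whose confidence score is too low. Here is a minimal Python sketch of that filter, again assuming label/score output from the image model. The scores for the cat and horse photos are made-up numbers for illustration, not real model results, and the 0.6 default is an arbitrary starting point.

```python
from typing import TypedDict


class Prediction(TypedDict):
    label: str
    score: float


def confident_labels(predictions: list[Prediction], threshold: float = 0.6) -> list[str]:
    """Keep labels whose score meets the threshold, highest score first."""
    ranked = sorted(predictions, key=lambda p: p["score"], reverse=True)
    return [p["label"] for p in ranked if p["score"] >= threshold]


# Made-up classifier outputs for a cat photo and a horse photo.
cat_photo = [{"label": "cat", "score": 0.88}, {"label": "lynx", "score": 0.09}]
horse_photo = [{"label": "horse", "score": 0.41}, {"label": "zebra", "score": 0.35}]

for name, result in [("cat", cat_photo), ("horse", horse_photo)]:
    labels = confident_labels(result)
    print(name, labels if labels else "below threshold, flag for review")
```

Raising the threshold trades fewer wrong answers for more “I’m not sure” cases; lowering it does the opposite, so tune it against a handful of real test images.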

Don’t be alarmed if you get weird outcomes at first. I once had my model tag a chihuahua as a hamster—true story! A bit of fine-tuning usually sorts things out.

6. Going Live (With a Bit of Caution)

Finally, deploy your multimodal AI agent for real-world use. Whether you integrate it into a chatbot or add it to an app, remember that AI isn’t infallible. Large language models are known to “hallucinate”, confidently producing plausible-sounding but false answers, so keep a close eye on user feedback.

If you’re planning to scale, make sure your server can handle the load; for heavier traffic, n8n’s queue mode can spread executions across multiple worker processes. After all, there’s nothing more disheartening than having your brand-new AI agent crash during a live demo. Trust me… I’ve been there.
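Before a live demo, a quick concurrency smoke test can reveal whether your setup copes. This Python sketch is one way to do it with the standard library, not an n8n feature. It assumes your workflow starts with a Webhook node on a hypothetical path `agent` that accepts JSON; `post_to_webhook` and `smoke_test` are illustrative helpers of my own.

```python
import json
import time
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Hypothetical production URL of a Webhook node whose path is "agent".
WEBHOOK_URL = "http://localhost:5678/webhook/agent"


def post_to_webhook(i: int, url: str = WEBHOOK_URL) -> bool:
    """Send one JSON test message; return True on an HTTP 2xx response."""
    body = json.dumps({"text": f"load test message {i}"}).encode("utf-8")
    request = urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return 200 <= response.status < 300
    except OSError:
        # URLError, HTTPError and timeouts are all OSError subclasses.
        return False


def smoke_test(send: Callable[[int], bool], requests: int = 20, workers: int = 5) -> dict:
    """Run `send` for each request index across `workers` threads and summarise."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(send, range(requests)))
    return {
        "requests": requests,
        "failures": results.count(False),
        "seconds": round(time.perf_counter() - start, 2),
    }
```

Something like `print(smoke_test(post_to_webhook, requests=50, workers=10))` gives a rough failure count and duration. Keep in mind that n8n serves production webhook URLs only while the workflow is active, and that AI API rate limits may fail before your server does.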

Conclusion: Stepping into AI’s New Frontier

And that’s it: your path to building a multimodal AI agent in n8n! It may sound like sci-fi, but so did touchscreen phones once upon a time. The exciting part is that we’re only scratching the surface. As AI develops further, we’ll see even wilder combinations of text, images, audio, and beyond.

I really believe that creating these multimodal experiences brings us closer to more natural, human-like interactions with technology. So go forth, experiment, and keep an open mind. After all, the best AI outcomes often spring from the most daring ideas.

This post was inspired by a video I watched on Leon van Zyl’s channel: https://www.youtube.com/watch?v=cTvaMD4Tt9Y